Introduction

First things first: compliments of the season, and I hope my readers stuck in the northern hemisphere are warm. In 2023 we witnessed a monumental transformation with the emergence of technologies like ChatGPT, a chatbot built on a Large Language Model (LLM) that performed exceptionally well across a wide range of applications. Its potential impact is increasingly evident; however, sectors like education, healthcare, and enterprise remain cautious because of some inherent limitations of LLMs.

The significant deficiencies of LLMs become apparent in these settings. These models often generate inaccurate information and lack domain-specific knowledge, especially when faced with highly specialized queries or topics beyond their training data. This poses a considerable challenge when deploying generative AI in real-world scenarios, where relying solely on a black-box LLM may prove inadequate. Conventionally, neural networks are adapted to specific domains or proprietary information by fine-tuning. While this method yields substantial results, it demands significant computational resources, incurs high costs, and requires specialized technical expertise, which makes it poorly suited to information that changes frequently.

Parametric and non-parametric knowledge play distinct roles here. Parametric knowledge is acquired during training and stored in the neural network weights, forming the basis for generated responses. Non-parametric knowledge resides in external sources such as vector databases and is treated as updatable supplementary information that is not encoded into the model. It lets an LLM access the latest or domain-specific information, improving the accuracy and relevance of responses.

Purely parametric language models, which store all their world knowledge in model parameters, face three limitations. It is hard to retain all knowledge from the training corpus, particularly rare and specific facts. Because parameters cannot be updated dynamically, parametric knowledge becomes outdated over time. And growing the parameter count increases computational cost for both training and inference.
To overcome these limitations, language models can take a semi-parametric approach by pairing a parametric model with a non-parametric corpus database. This approach is what we term Retrieval Augmented Generation (RAG). On their own, LLMs may fall short of providing the most relevant and accurate information, especially for complex or domain-specific queries. RAG complements them by retrieving passages from external data sources through semantic search and supplying them to the model as context, which is particularly valuable for applications that need domain-specific or proprietary data. Because semantic search matches meaning rather than exact keywords, it surfaces relevant passages that keyword-based approaches miss. RAG can also be time-aware, restricting retrieval to specific time and date ranges. Overall, RAG acts as a robust augmentation for LLMs, reducing errors, improving relevance, and enabling context-aware responses across a spectrum of applications.
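
The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `embed` function is a bag-of-words stand-in for a real embedding model (so it matches on word overlap rather than meaning), the corpus and its `year` field are made up, and the resulting prompt would be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, corpus, k=2, since=None):
    """Return the k most similar documents, optionally keeping only year >= since."""
    candidates = [d for d in corpus if since is None or d["year"] >= since]
    q = embed(query)
    ranked = sorted(candidates, key=lambda d: cosine(q, embed(d["text"])), reverse=True)
    return ranked[:k]

def build_prompt(query, docs):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {d['text']}" for d in docs)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

# Made-up knowledge base; the 'year' field enables time-aware retrieval.
corpus = [
    {"text": "The refund policy allows returns within 30 days.", "year": 2021},
    {"text": "The refund policy allows returns within 14 days.", "year": 2023},
    {"text": "Our office is closed on public holidays.", "year": 2023},
]
query = "What is the refund policy for returns?"
top = retrieve(query, corpus, k=1, since=2023)
prompt = build_prompt(query, top)
```

With the `since=2023` filter, the outdated 2021 policy is never a candidate, which is the point of time-aware retrieval: the model only sees the current version of the fact.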

The importance of RAG in app development, and its role as an indispensable tool for augmenting LLMs, is underscored by the number of research papers released in this space this year. By incorporating external data sources, it improves the relevance and accuracy of responses while mitigating errors and hallucinations. At its core, RAG relies on semantic search, which goes beyond literal matches of search terms to discern the meaning behind user queries. This is particularly valuable for LLM apps that need access to domain-specific or proprietary data.

Building powerful, context-aware LLM apps may appear simple on the surface, but it is an extraordinarily challenging task. Crafting an intelligent ChatGPT-like tool integrated with a custom knowledge base involves orchestrating multiple non-trivial components. A basic vector database is not enough for retrieval; a robust RAG implementation requires a full-scale search engine capable of a nuanced semantic understanding of user queries. This fusion of advanced language models and comprehensive search capabilities is what delivers meaningful and contextually rich responses.

Most importantly, stakeholders face a crucial decision: whether to adopt existing third-party LLMs, exemplified by ChatGPT, or to develop proprietary models. This decision calls for a thorough examination of the costs, benefits, and trade-offs between proprietary enterprise models and open-source LLMs, taking into account performance, ongoing expenses, risks, and more. Even independent developers and hobbyists, despite their distance from corporate decision-making, face the same choice.

A feature-by-feature comparison of Retrieval-Augmented Generation (RAG) with traditional fine-tuned models reveals characteristics that influence performance and applicability.

Knowledge Updates: RAG updates the retrieval knowledge base directly, keeping information current without frequent retraining, which suits dynamic data environments. Fine-tuned models store static knowledge and must be retrained whenever the knowledge or data changes.

External Knowledge: RAG excels at using external resources, especially documents and other structured or unstructured databases. Fine-tuning can align a model with external knowledge, but it is less practical for frequently changing data sources.

Data Processing: RAG requires minimal data processing and handling. Fine-tuning relies on constructing high-quality datasets, and limited datasets may not yield significant performance improvements.

Model Customization: RAG focuses on information retrieval and integrating external knowledge, so it may not fully customize model behavior or writing style. Fine-tuning allows adjustments to behavior, writing style, or domain knowledge, including specific tones or terms.

Interpretability: With RAG, answers can be traced back to specific data sources, providing higher interpretability and traceability. Fine-tuned models behave like black boxes, with little clarity on why the model reacts a certain way.

Computational Resources: RAG requires resources for retrieval strategies, database technologies, and the maintenance of external data source integration and updates. Fine-tuning demands the preparation and curation of high-quality training datasets, the definition of fine-tuning objectives, and the corresponding compute.

Latency Requirements: RAG involves data retrieval, potentially leading to higher latency. A fine-tuned model can respond without retrieval, resulting in lower latency.

Reducing Hallucinations: RAG is inherently less prone to hallucinations because each answer is grounded in retrieved evidence. Fine-tuning on domain data can reduce hallucinations, but the model may still hallucinate when faced with unfamiliar input.

Ethical and Privacy Issues: For RAG, concerns arise from storing and retrieving text from external databases. For fine-tuned models, they arise from sensitive content in the training data.

To Buy or Build: A Complex Decision-Making Process for RAG Apps

The decision to adopt AI technology, particularly in the context of developing Retrieval-Augmented Generation (RAG) apps, introduces a myriad of considerations. In highly regulated industries such as banking and healthcare, and for local deployments on devices, the stakes are undeniably high. Deploying advanced language models, akin to the considerations with ChatGPT, becomes a matter of both potential and concern for RAG apps. On one hand, the model's prowess in generating contextually rich responses is evident. On the other hand, complexities arise from its limited access to proprietary and sensitive data, especially when navigating through stringent regulatory landscapes demanding unwavering commitment to data protection. For developers of RAG apps, deploying sophisticated language models presents challenges that extend beyond mere compliance. It demands a fortified set of measures to prevent potential data leakage. Indie developers, with their unique use cases in the realm of RAG apps, further complicate the landscape. They grapple with challenges such as handling updates of applications and external dependencies or leveraging open-source LLMs locally for enhanced offline functionality. The aim is to achieve a seamless and uninterrupted user experience, particularly in scenarios where a continuous internet connection might not be guaranteed. In contrast, the world of Open-source Large Language Models (LLMs) is rapidly closing the performance gap. These models bring adaptability and fine-tunability to specific data sources, offering companies the potential to enhance performance in specialized contexts, a consideration that is especially pertinent for developers of RAG apps. The fine-tuning process, a hallmark of open-source models, empowers organizations to customize models to their precise needs and optimize them for unique requirements specific to RAG app development. 
The choice between open-source models and closed APIs from companies becomes a matter of control, transparency, and customization, with an essential note that while open-source models are free to use, the infrastructure to host and deploy them is not. Careful consideration of cost implications is necessary, given the resource-intensive nature of these models.

The current paradigm often involves the initial use of proprietary models until demand scales to a level where it becomes economical to host, deploy, and manage open-source LLMs on servers for RAG app development. The ever-evolving landscape of open-source development promises a reduction in model size, performance requirements, and more, presenting exciting possibilities for developers of RAG apps. Ultimately, the decision to build, focusing on opening up the black box and owning the algorithms that empower workflows in the domain of RAG apps, is driven by a forward-looking perspective, ensuring a nuanced approach to this complex decision-making process.
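
The point at which self-hosting becomes economical can be estimated with simple arithmetic. The sketch below assumes a flat monthly hosting bill that does not grow with traffic (a simplification) and uses made-up prices; substitute your own quotes.

```python
def monthly_api_cost(tokens_per_month, price_per_million_tokens):
    """Cost of a pay-per-token API for a given monthly volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens

def break_even_tokens(fixed_hosting_cost, price_per_million_tokens):
    """Monthly token volume above which a flat hosting bill beats per-token pricing."""
    return fixed_hosting_cost / price_per_million_tokens * 1_000_000

# Hypothetical figures, for illustration only.
API_PRICE = 2.0        # dollars per million tokens
HOSTING_COST = 1500.0  # dollars per month for a GPU server

threshold = break_even_tokens(HOSTING_COST, API_PRICE)
print(f"Self-hosting pays off above {threshold:,.0f} tokens per month")
```

Below the threshold the proprietary API is cheaper; above it, the fixed hosting cost is spread over enough tokens to win. Engineering time for operating the server is not included and would push the threshold higher.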

Benchmarking RAG Models: Navigating the Sea of Options

Benchmarking Large Language Models (LLMs) is a crucial step in the journey of selecting the optimal model for your Retrieval-Augmented Generation (RAG) app. The evaluation process involves navigating a complex sea of options, with standardized benchmarks serving as guiding lights. These benchmarks encompass a spectrum of tasks, from basic ones like sentiment analysis to intricate challenges such as coding via reasoning skills. While benchmark scores are often highlighted for marketing purposes, users must conduct hands-on experiments and assessments tailored to their specific use cases.

Caution should be exercised in interpreting benchmark metrics, as they can be manipulated. The true measure of a model's capability lies in practical experience, demanding rigorous testing before deployment in a production environment. Among the array of available models, a pragmatic approach involves relying on real-world tests and practical scenarios. The General Language Understanding Evaluation (GLUE) benchmark stands out as a comprehensive tool, amalgamating tasks from various datasets to assess a model's language understanding capabilities holistically. Its diverse set of language challenges aids in evaluating a model's generalization across different linguistic contexts and applications.

A high GLUE score indicates a language model's proficiency in diverse tasks, showcasing robust language understanding. This broad applicability is especially valuable for RAG apps and other AI-driven language tasks where comprehensive language comprehension is paramount. To delve deeper into LLM benchmarks, resources like the article [Insert Resource Link] provide valuable insights.

In the experimentation phase, it is vital to assess both the quality of suggestions and the processing speed of each model. Depending on your team's requirements, choosing a 13B or 34B parameter model may be preferable. However, considering factors like memory availability and processing capabilities, a 7B model might suffice in rare scenarios, allowing for local deployment using tools like Ollama, LM Studio, or LocalAI.
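
A small harness makes it easier to compare candidates on both quality and speed. The sketch below is one possible shape, with assumptions labeled: `fake_model` is a hypothetical stand-in for a call to a locally served model, and "quality" is reduced to checking whether an expected substring appears in the answer, which is far cruder than a real evaluation.

```python
import time

def evaluate(model_fn, cases):
    """Run model_fn on each (prompt, expected_substring) pair; report accuracy and mean latency."""
    correct = 0
    total_seconds = 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = model_fn(prompt)
        total_seconds += time.perf_counter() - start
        if expected.lower() in answer.lower():
            correct += 1
    return {"accuracy": correct / len(cases), "mean_latency_s": total_seconds / len(cases)}

# Hypothetical stand-in for a call to a locally served model.
def fake_model(prompt):
    return "Paris" if "France" in prompt else "I am not sure."

# Replace with prompts drawn from your own use case.
cases = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Peru?", "Lima"),
]
report = evaluate(fake_model, cases)
```

Running the same `cases` against a 7B, 13B, and 34B model gives a like-for-like view of the accuracy gained per second of added latency.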

Once the ideal LLM for your RAG app is identified, the next crucial step is exploring deployment methods that align with your preferences and operational requirements.

RAG Model Deployment Options

Deploying Large Language Models (LLMs) for Retrieval-Augmented Generation (RAG) apps means navigating a plethora of options, each suited to specific preferences and requirements. Companies like Together and Hugging Face provide inference endpoints for running open-source models. Text Generation Inference (TGI) is an open-source toolkit optimized for fast inference, and vLLM is a user-friendly library for LLM inference and serving; both support models such as Code Llama, Mistral, StarCoder, and Llama 2.

SkyPilot, a versatile framework, simplifies running LLMs on cloud infrastructure, aiming for cost savings, good GPU availability, and managed execution. Anyscale Private Endpoints offer an LLM API within your own cloud environment that follows the OpenAI API format. AWS provides various deployment options, including VM instances, SageMaker, and Bedrock. Azure offers VM instances and Azure Machine Learning, while GCP offers VM instances and Model Garden on Vertex AI.

Despite the potential benefits of deploying LLMs, challenges arise, notably the substantial costs involved. Costs vary with model size, latency requirements, deployment method, and memory selection, so a baseline cost estimate is essential before optimizing deployment. Memory requirements depend on the chosen model and desired latency: the GPU needs enough memory to hold the model and to handle concurrent requests without compromising response times.
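
A rough memory estimate is a useful starting point for that baseline. The sketch below uses two back-of-the-envelope formulas: weights take parameters times bytes per parameter, and the attention cache takes two values (key and value) per layer, per hidden unit, per token. The layer count and hidden size are assumed values for a hypothetical 7B-class model with standard multi-head attention; activations and framework overhead are ignored.

```python
def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * bytes_per_param / 1e9

def kv_cache_memory_gb(n_layers, hidden_size, n_tokens, bytes_per_value=2):
    """Attention cache size: keys and values (2x) for every layer and cached token."""
    return 2 * n_layers * hidden_size * n_tokens * bytes_per_value / 1e9

# Assumed shape for a hypothetical 7B-class model: 32 layers, hidden size 4096.
weights_fp16 = weight_memory_gb(7e9, 2)      # 16-bit weights
weights_4bit = weight_memory_gb(7e9, 0.5)    # 4-bit quantized weights
# Eight concurrent requests, each holding 4096 tokens of context, at 16-bit precision.
kv_cache = kv_cache_memory_gb(32, 4096, 8 * 4096)

print(f"fp16 weights: {weights_fp16:.1f} GB, 4-bit weights: {weights_4bit:.1f} GB")
print(f"KV cache for 8 x 4096 tokens: {kv_cache:.1f} GB")
```

Under these assumptions the cache for concurrent requests is larger than the fp16 weights themselves, which is why concurrency and context length matter as much as model size when picking a GPU.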

Navigating Challenges with Smaller RAG Models: A Nuanced Strategy

Deploying Retrieval-Augmented Generation (RAG) projects introduces a myriad of challenges, with variable costs emerging as a central concern. The factors contributing to these costs include model size, latency requirements, and the chosen deployment methods. One of the primary challenges lies in striking a balance between the sophistication of the RAG model and the practicality of deployment within budget constraints. The model size is a critical factor influencing deployment costs. Larger models, equipped with more parameters, often demand higher computational resources, storage capacity, and memory. As the model size increases, so does the need for robust infrastructure, impacting both the efficiency and cost-effectiveness of deployment. This necessitates careful consideration of the trade-offs between model complexity and the practicality of resource allocation.

Latency, the time it takes for the RAG model to generate responses, is another pivotal factor. In scenarios where real-time or near-real-time interaction is crucial, minimizing latency becomes imperative. Achieving low-latency deployments often involves investing in high-performance hardware, further contributing to the overall deployment costs. Striking the right balance between model complexity and response time is crucial for ensuring a seamless user experience.

The choice of deployment methods adds another layer of complexity. Various cloud platforms and services offer flexibility and scalability but come with associated costs. Self-hosting on cloud providers necessitates technologies optimized for prompt responses and scalability, further influencing the overall deployment expenses.

In navigating these challenges, the concept of using smaller, finely-tuned RAG models for specific sub-tasks within the app emerges as a strategic approach. This approach draws parallels to the early days of personal computing, where innovative solutions emerged despite hardware limitations. By breaking down complex tasks into smaller, more manageable components, developers can optimize models for specific facets of the overall RAG process.

This nuanced strategy acknowledges the inherent challenges of deploying large and resource-intensive models. Instead of relying on a monolithic and potentially cost-prohibitive approach, developers can adopt a modular and specialized model architecture. Each sub-task within the RAG app is addressed by a smaller model, finely tuned to excel in that particular aspect. This not only enhances the efficiency of the RAG system but also allows for more granular optimization of resources, mitigating the challenges associated with variable costs.
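
One way to picture this modular architecture is a small routing table that maps each sub-task to its own model. Everything named here is hypothetical: the model identifiers are placeholders, and the stub functions stand in for real calls to each model.

```python
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass
class SubTaskModel:
    name: str                  # placeholder model identifier
    run: Callable[[str], str]  # stand-in for a call to that model

def make_stub(label):
    """Build a fake model call that just tags its input."""
    return lambda text: f"[{label}] {text}"

# Hypothetical registry: each RAG sub-task gets its own small model.
ROUTES: Dict[str, SubTaskModel] = {
    "rewrite_query": SubTaskModel("small-query-rewriter", make_stub("rewritten")),
    "summarize": SubTaskModel("small-summarizer", make_stub("summary")),
    "answer": SubTaskModel("mid-size-generator", make_stub("answer")),
}

def dispatch(task, text):
    """Send the text to the model registered for this sub-task."""
    if task not in ROUTES:
        raise KeyError(f"no model registered for sub-task {task!r}")
    return ROUTES[task].run(text)

result = dispatch("summarize", "long retrieved passage")
```

Because each entry is independent, a sub-task's model can be swapped or resized without touching the rest of the pipeline, which is where the granular resource optimization comes from.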

In essence, the concept of using smaller, finely-tuned RAG models mirrors the pragmatic solutions adopted in the early days of personal computing. It emphasizes resource efficiency, cost-effectiveness, and the ability to deliver meaningful results within the constraints of available technology. As the landscape of RAG-based projects evolves, this approach provides a nuanced and effective means of addressing the challenges posed by variable costs, contributing to the development of more scalable and sustainable RAG applications.

Tradeoffs: Inference Speed, Model Size and Performance

Speeding up inference for autoregressive language models, starting from a plain autoregressive generate function, involves both algorithmic and hardware-related challenges. Algorithmically, the generate function must process a growing number of tokens with each cycle, because the model runs on the cumulative context; total computation therefore grows quadratically with output length. In addition, vanilla attention has quadratic complexity because all tokens attend to each other, an N² scaling issue.

On the hardware front, the sheer size of language models is a challenge, even for relatively modest ones like GPT-2 with 117 million parameters. These models are often hundreds of megabytes in size and are limited by memory bandwidth, since weights must be loaded from memory repeatedly during inference. Modern processors have caches close to the cores for faster access, but large model weights often do not fit in them, leading to substantial delays.

Strategies for speeding up inference take various forms. Model quantization reduces parameter precision, which lowers memory usage and increases inference speed. Optimized frameworks like TensorFlow Lite or ONNX Runtime use hardware capabilities more efficiently. Hardware acceleration through GPUs or TPUs offloads computation from the CPU, although transfer bottlenecks need careful consideration. Parallelization techniques, such as model or data parallelism, process inputs concurrently and raise overall throughput. Dynamic input shapes and caching intermediate results during the forward pass also contribute to efficiency gains.

However, these optimizations come with trade-offs. While batch processing improves throughput, it may increase the Time to First Token and generation latency.
Accelerators like GPUs or TPUs can provide dramatic speedups but must contend with memory constraints and transfer bottlenecks. Compiler tools like torch.compile optimize code for specific hardware, though the gains must be balanced against code readability. Caution is warranted with data-dependent control flow, as it can lead to graph breaks and reduce the effectiveness of compilation. In essence, achieving faster inference in production demands an understanding of both the algorithm and the hardware, with a keen awareness of the trade-offs between speed and output quality.

Several other strategies can be employed, including continuous batching, shrinking model weights, using 16-bit floats, exploring even smaller quantization formats, and KV caching. Batching passes multiple sequences to the model simultaneously, generating the next token for each in a single forward pass. Sequences are padded with filler tokens to equal length, and masking prevents padding tokens from influencing generation. Because the weights are loaded once for the whole batch, overall inference time drops. Continuous batching refines this by letting new requests join the batch as soon as earlier sequences finish, instead of waiting for the whole batch to complete. Shrinking model weights involves quantization, where a model trained in a larger format, such as fp16, is converted to a smaller format, saving memory and improving performance. 16-bit floats, specifically fp16 or bfloat16, offer significant memory savings, with trade-offs in precision and hardware support. Parameter size can be reduced further with quantization formats like those offered by the llama.cpp project, down to less than 5 bits per weight. However, extreme quantization may degrade model output, so speed must be balanced against inference quality.
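
To make the quantization trade-off concrete, here is a minimal sketch of symmetric 8-bit quantization over a single list of weights. It is deliberately simpler than the block-wise formats used by projects like llama.cpp, and the weight values are made up; the point is only to show where the memory saving and the precision loss come from.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 if all weights are zero
    q = [round(w / scale) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in q]

# Made-up weights for illustration.
weights = [0.12, -0.5, 0.33, 0.0, 0.49]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error = {max_error:.5f}")
```

Each weight now needs one byte plus a shared scale instead of two or four bytes, at the cost of a small rounding error. Going below 8 bits shrinks storage further but widens the step size, which is the quality loss the text warns about.
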
KV caching exploits the structure of Transformer attention: the key and value vectors computed for earlier tokens do not change, so they are cached and reused, and each new step only computes the query, key, and value for the newest token. Together, these strategies contribute to faster inference in autoregressive language models, provided the trade-offs are weighed carefully to preserve output quality.
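
The saving from KV caching can be seen by counting how many token positions the model has to process during generation. The sketch below does only that counting, under the simplifying assumption that cost is proportional to positions processed; it does not model attention itself.

```python
def positions_without_cache(prompt_len, new_tokens):
    """Each step re-runs the model over the whole sequence generated so far."""
    return sum(prompt_len + i for i in range(new_tokens))

def positions_with_cache(prompt_len, new_tokens):
    """The prompt is processed once; every later step feeds only the newest token."""
    return prompt_len + (new_tokens - 1)

prompt_len, new_tokens = 100, 50
naive = positions_without_cache(prompt_len, new_tokens)
cached = positions_with_cache(prompt_len, new_tokens)
print(f"without cache: {naive} positions, with cache: {cached} positions")
```

The uncached count grows quadratically with the number of generated tokens, while the cached count grows linearly. The price is the extra memory needed to hold the cached keys and values.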

Conclusion

Opting for open-source AI in the deployment of Large Language Models (LLMs) within your Retrieval-Augmented Generation (RAG) application offers a compelling alternative to proprietary software, driven by two primary considerations:

Cost Considerations: Cost weighs heavily on the decision, particularly when comparing proprietary software to open-source alternatives like LLaVA and Llama. Proprietary solutions often impose hefty costs on users. In contrast, open-source models, coupled with affordable API services, provide a cost-effective way to integrate advanced language models into your RAG application. Models like LLaVA and Llama not only offer accessibility but also underscore the democratization of AI, making powerful language models available to a broader audience without the financial constraints of proprietary alternatives.

Censorship Challenges: The issue of censorship adds another layer of complexity when dealing with proprietary AI models. These models constantly face the challenge of mitigating undesirable outputs and potential misuse. For instance, GPT-4 exhibits variability in its capabilities due to ongoing efforts to prevent the generation of inappropriate content. This dynamic nature introduces challenges in accurately predicting the model's performance, as adjustments made to address one aspect may inadvertently impact others. The continuous struggle to balance preventing misuse with maintaining consistent performance highlights the inherent challenges and uncertainties tied to proprietary AI models. In contrast, open-source alternatives provide a more static and stable solution. Configured to specific requirements, they offer predictability and reliability, avoiding the unpredictable fluctuations associated with proprietary counterparts.

Choosing open-source AI, such as LLaVA and Llama, gives you a static and stable solution that consistently delivers reliable outcomes once configured to meet specific requirements. Whether for personal use or web hosting, the predictability of open-source models ensures dependable performance over time.

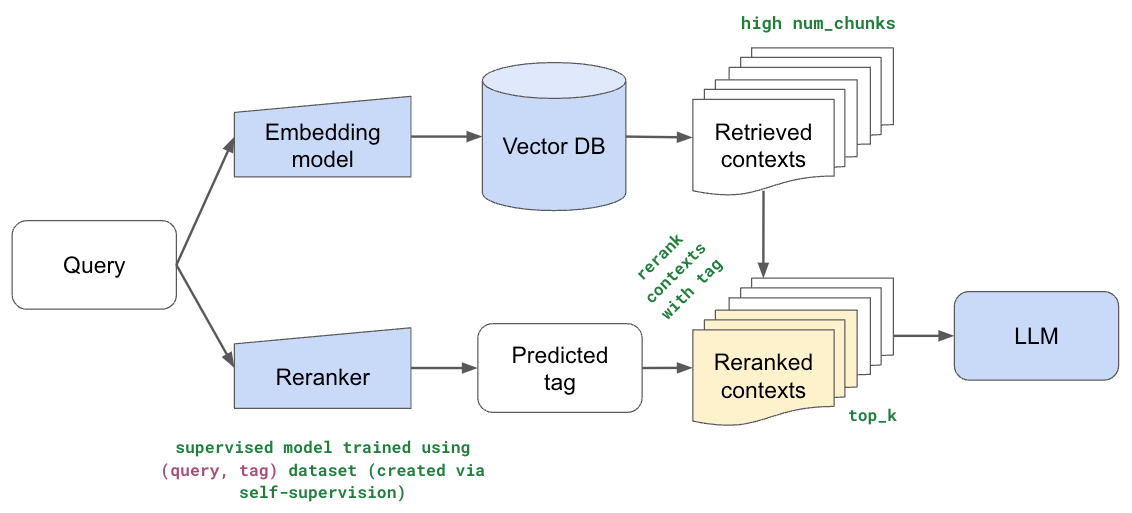

In the next article, we will go over some approaches to RAG; this paper is a goldmine for research on the topic. If you find yourself navigating the complexities of open-source AI and want guidance, feel free to reach out. I'm here to provide personalized support and expertise to help you make informed decisions tailored to your needs.